Neoteny in the Growth of Software Flexibility and Power

Neoteny is a biological phenomenon of an organism’s development observed across multiple generations of a species. According to Wikipedia, neoteny is “the retention, by adults in a species, of traits previously seen only in juveniles”, and accounts for many evolutionary shifts, including the human brain’s ability to remain elastic and malleable later in life than those of our distant ancestors.

So how does this relate to software? Software is a great deal like an organic species. The species emerged (not long ago), incubated in a more or less fragile state for a number of decades, and continues to evolve today. Each software application or system built is a new member of the species, and over the generations they have become more robust, intelligent, and useful. We’ve even formed a symbiotic relationship with software.

Consider the fact that software running on computers was at one time compiled to machine language code for a specific processor. With the invention of platform-independent instruction sets and their associated runtimes performing just-in-time compilation (Java’s JVM and .NET Framework’s CLR), we’ve delayed the actual production of machine language code until it’s actually needed on the target machine. The compiler produces a slightly more abstract representation of the program logic, and an extra translation step at installation or runtime is needed to complete the process to make the software usable.

With the growing popularity of dynamic languages such as Lisp, Python, and the .NET Framework’s upcoming release of its Dynamic Language Runtime (DLR), we’re taking another step of neoteny. Instead of a compiler generating instruction byte codes, a “compiler for any dynamic language implemented on top of the DLR has to generate DLR abstract trees, and hand it over to the DLR libraries” (per Wikipedia). These abstract syntax trees (AST), normally an intermediate artifact created deep within the bowels of a traditional compiler (and eventually discarded), are now persisted as compiler output.

Traits previously seen only in juveniles… now retained by adults. Not too much of a metaphorical stretch! The question is: how far can we go? And I think the answer depends on the ability of hardware to support the additional “just in time” processing that needs to occur, executing more of the compiler’s tail-end tasks within the execution runtime itself, providing programming languages with greater flexibility and power until the compilation stages we currently execute at design-time almost entirely disappear (to be replaced, perhaps, by new pre-processing tasks.)

I remember my Turbo Pascal compiler running on a 33 MHz processor with 1 MB of RAM, and now my cell phone runs at 620 MHz (with a graphics accelerator) and has gigabytes of memory and storage. And yet with the state of things today, the inclusion of language-specific compilers within the runtime is still quite infeasible. In the .NET Framework, there are too many potential languages that people might attempt to include in such a runtime: C#, F#, VB, Boo, IronPython, etc. Trying to cram all of those compilers into a universal runtime that would fit (and perform well) on a cell phone or other mobile device isn’t yet feasible, which is why we have technologies with approaches like System.Reflection.Emit (on the full .NET Framework), and Mono.Cecil (which works on Compact Framework as well). These work at the platform-independent CIL level, and so can interpret and generate programs generically, interact with each others’ components, and so on. One metaprogramming mechanism can therefore be reused across all .NET languages, and this metalinguistic programming trend is being discussed on the C# and other language design teams.

I’ve just started using Mono.Cecil, chosen because it is cross-platform friendly (and open source). The API isn’t very intuitive, but because the source is available, and because extension methods can go a long way to making it more accessible, it’s a great option. The documentation is sparse, and assembly generation has some performance issues, but it’s a work-in-progress with tremendous potential. If you’re doing any kind of static analysis or have any need to dynamically generate and consume types and assemblies (to get around language limitations, for example), I’d encourage you to check it out. A comparison of Mono.Cecil to System.Reflection can be found here. Another library called LinFu, which performs lots of mind-bending magic and actually uses Mono.Cecil, is also worth exploring.

VB10 will supposedly be moving to the DLR to become a truly dynamic language, which considering their history of support for late binding, makes a lot of sense. With a dynamic language person on the C# 4.0 team (Jim Hugunin from IronPython), one wonders if C# won’t eventually go the same route, while keeping its strongly-typed feel and IDE feedback mechanisms. You might laugh at the idea of C# supporting late binding (dynamic lookup), but this is being planned regardless of the language being static or dynamic.

As the DLR evolves, performance optimizations are being discovered and implemented that may close the gap between pre-compiled and dynamically interpreted languages. Combine this with manageable concurrent execution, and the advantages we normally attribute to static languages may soon disappear altogether.

The Precipitous Growth of Software System Complexity

We’re truly on the cusp of a precipitous period of growth for software complexity, as an exploding array of devices and diverse platforms around the world connect in an ever-more immersive Internet. Taking full advantage of parallel and distributed computing environments by solving the challenges of concurrency and coordination, as well as following the trend toward increased integration among software components, is pushing software complexity into new orders of magnitude. The strategies we come up with for organizing these systems will have to take several key factors into consideration, and we will have to raise the level of abstraction to a point that may be hard for us to imagine with our existing tools and languages.

One aspect that’s clear is the rise of declarative or intention-based syntax, whether represented as XML, Domain Specific Langauges (DSL), attribute decoration, or a suite of new visual modeling editors. This is in part a consequence of raising the abstraction level, as lower-level libraries are entrusted to solve common problems and take advantage of common opportunities.

Another is the use of Inversion of Control (IoC) containers and dependency injection in component based architectures, thereby standardizing the lifecycle of the application and its components, and providing a common environment or ecosystem for all of its components, as well as introducing a common protocol for component location, creation, access, and disposal. This level of consistency is valuable for sharing a common understanding of how to troubleshoot software components. The more predictable a component’s interaction with the rest of the system, the easier it is to debug and modify; conversely, the more unique it and its communication system is, the more disparity there is among components, and the more difficult to understand and modify without introducing errors. If software is a species and applications are individuals, then components are the cells of a system.

Even the introduction of functional programming languages into the mainstream over the past couple years is due, in part, to the ability of those languages to provide more declarative support, more syntactic flexibility, and new ways of dealing with concurrency and coordination issues (such as immutable values) and light-weight, ad hoc data structures (tuples).

Balancing the Forces of Coupling, Cohesion, and Modularity

On a fundamental level, the more that components are independent, the less coupled and the more modular and flexible they are. But the more they can communicate with and are allowed to benefit from each other, the more interdependent they become. This adds to cohesiveness and synergy, but also stronger coupling to a community of abstractions.

A composition of services has layers and segments of interdependence, and while there are dependencies, these should be dependencies on abstractions (interfaces and not implementations). Since there will be at least one implementation of each service, and the extensibility exists to build others as needed, dependency is only a liability when the means for fulfilling it are not extensible. Both sides of a contract need to be fulfilled regardless; service-oriented or component-based designs merely provide a mechanism for each side to implement and fulfill its part of the contract, and ideally the system also provides a discovery mechanism for the service provider to publish its availability for other components to discover and consume it.

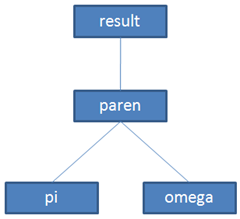

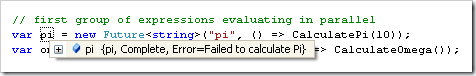

If you think about software components as a hierarchy or tree of services, with services of one layer depending on more root services, it’s easy to see how this simplifies the perpetual task of adding new and revising existing functionality. You’re essentially editing an outline, and you have opportunities to move services around, reorganize dependencies easily, and have many of the details of the software’s complexity absorbed into this easy-to-use outline structure (and its supporting infrastructure). Systems of arbitrary complexity become feasible, and then relatively routine. There’s a somewhat steep learning curve to get to this point, but once you’ve crossed it, your opportunities extend endlessly for no additional mental cost. At least not in terms of how to compose your system out of individual parts.

Absorbing Complexity into Frameworks

The final thing I want to mention is that a rise in overall complexity doesn’t mean that the job of software developers necessarily has to become more difficult than it is currently. With the proper design of components that abstract away the complexity into reusable frameworks with intuitive interfaces, developers at the business logic level don’t need to be aware of the inner complexity, in the same way that software developers are largely absolved of the responsibility of thinking about the processor’s inner workings. As we build our technology stack higher and higher, like the famed Tower of Babel, we must make sure that it’s organized and structured in a way to support that upward growth and the load imposed upon it… so it doesn’t come crashing down.

The requirements for building components tomorrow will not be the same as they were yesterday. As illustrated in this account of the effort involved in a feature change at Microsoft, in the future, we will also want to consider issues such as tool-assisted refactorability (and patterns that frustrate this, such as “magic strings”), and due to an explosion of component libraries, discoverability of types, members, and their use.

A processor can handle any complexity of instruction and data flow. The trick is in organizing all of this in a way that other developers can understand and work with.