A Scorching Hot Market

The smart phone market is the hottest market in the computer world, based on unbelievable growth. It surpasses the desktop “personal computer”, the PC, in finding its way into the pockets and hands of consumers who might not otherwise buy a larger computer; and in doing so, has established itself as the ultimate personal computer.

It’s like filling a ditch with large boulders until no more will fit, and then filling the remaining space with smaller debris. Smart phones, and all cell phones to an even larger extent, are that debris. And in the future, we’ll fill what space is left with granules of sand.

Looking at the Windows Phone from every angle, from features to development patterns, from its role in the market to its potential in peer-to-peer and cloud computing scenarios, overall I have to admit I am very impressed and quite excited. I also have some harsh criticism; and because of my excitement and optimism, strong hope that these concerns will be addressed as matters of great urgency. If Microsoft is serious about competing with Android and iPhone, they’ll have to invest heavily in doing what they’ve been so good at: giving developers what they want.

The Consumer Experience: Entertainment

Microsoft’s primary focus is on the consumer experience, and I think that focus is aptly set. But that’s the goal, and supporting developers is the means to accomplish that. You can’t separate one from the other. However, you must always prioritize; and while features like background thread execution has many solid business cases, it has to wait for consumer experience features to be refined and reliability guarantees to be worked out.

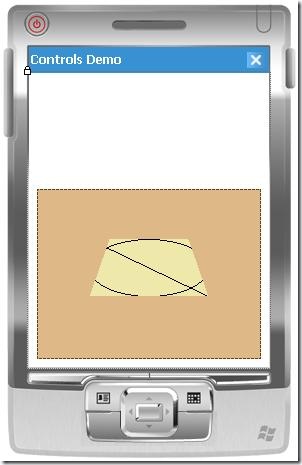

What this means is that Microsoft is paying much more attention to Design with a capital D. They’re taking tight control over the operating system’s shell UI in order to provide consistency across devices and carriers –taking a lesson from Android, which is painfully fragmented—and have announced some exciting new controls such as TiltContentControl, Panorama and Pivot.

By focusing on the consumer, Microsoft is in essence really just reminding themselves not to think like a company for enterprise customers. Let’s face it: Microsoft is first and foremost an enterprise products company. But by integrating XNA and Xbox Live into the Windows Phone platform, they’re creating a phone with a ton of gamer–and therefore non-enterprise–buzz.

Entertainment is Windows Phone’s number one priority. Don’t worry: the enterprise capabilities you want will come: as services in the OS or as apps that Microsoft and third-parties publish. But those enterprise scenarios will be even more valuable as the entertaining nature of the device puts it into millions and millions of hands.

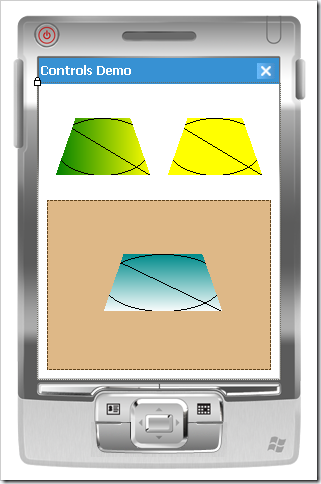

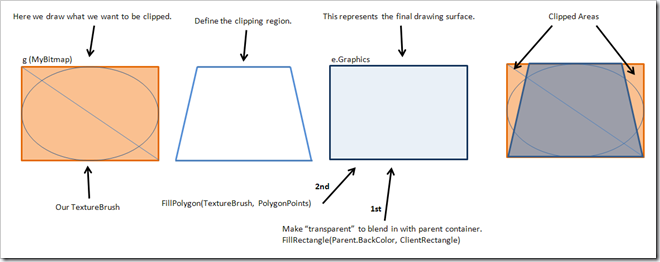

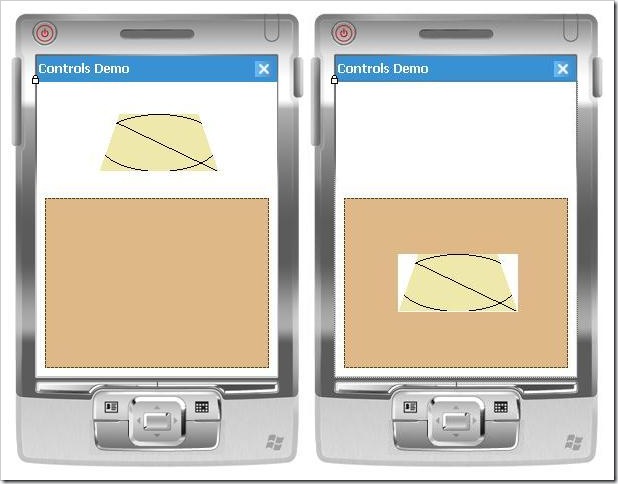

Introducing Funderbolt Games

To take advantage of this explosion of interest in smart phones and gaming, and having developed mobile device software for the past five years, I’ve recently started a company called Funderbolt Games. We’re developing games (initially targeting Windows Phone and Android) for children from about one to five years old, and will eventually publish casual games for adults as well.

I’ve been working with a fantastic artist, Shannon Lazcano, in building our first Silverlight game for Windows Phone. It will be a simple adventure game with lots of places and activities to explore as a family of mice interact with a rabbit, a dog, a frog, some fireflies, dragonflies, and more. Based on heaps of research of kids games on the iPhone, and having watched young children play most of the games in the App Store over the past two years, the games we’re creating are guaranteed to keep young kids entertained for dozens of hours.

In the next few weeks, I’ll publish more details and some screen shots. The target for our first title is October 27th (PDC 2010).

The Shell User Interface

The Android operating system’s openness has become one of its weaknesses. Shell UI replacements like Motorola’s Blur and HTC’s Sense are modifications of the operating system itself. When a new version of Android is released, these manufacturer’s take their sweet time making the necessary adaptations with their custom front ends, and customers end up several versions behind–much to their chagrin.

It would be much better to write shell replacements as loosely coupled applications or services, and design the OS to make this easy. I completely support companies innovating in the shell UI space! We need to encourage more of this, not to lock them out. These experiments advance the state of the art in user experience design and provide users with more options. If anything, the only rule coming from the platform should be that nobody shall prevent users from changing shells.

Because the smartphone is such a personal device, it makes sense that different modes of interaction might rely in part on personal taste. Some users are simply more technical than others (developers), or need to be shielded from content as well as messing things up (children), and there are people with various disabilities to consider as well. One UI to rule them all can’t possibly be the right approach.

Despite this, it’s really not a shock then that Windows Phone 7 will have a locked down shell UI in its first and probably second versions. Flexibility and choice sound good in theory, but in reality can create quite a mess when done without careful planning, and the Windows Phone team has a substantial challenge in figuring out how to open things up without producing or encouraging many of the same problems they see elsewhere. I’ll be eagerly watching this drama unfold over the first year that Windows Phones are in the wild.

That being said, I have to admit I’m not a fan of the Windows Phone 7 Start Menu. It feels disappointingly flat and imbalanced, wasting a good bit of screen real estate to the right of the tile buttons. I’m glad they attempted something different, but I think they have a long way to go toward making it that sexy at-a-glance dashboard that would inspire any onlooker to go wide-eyed with envy.

The demo videos of Office applications are likewise drab. Don’t get me wrong: the fonts, as everyone is quick to point out, are beautiful, and we all love the parallax scrolling effects. But we’ve come to expect and appreciate some chrome: those color gradients and rounded corners and other interesting geometrical shapes that help to define a sense of visual structure. As noted by several tweeple, it sometimes looks like a hearkening back to ye olden days of text-only displays. More beautiful text, but nothing beyond that to suggest that we’ve evolved beyond the printing press.

The one line I keep hearing over and over again is that Microsoft is serious about keeping an aggressive development cycle, revving Windows Phone quickly to catch up to their competitors. The sting of Windows Mobile’s abysmal failure is still fresh enough to serve as an excellent motivator to make the right decisions and investments, and do well by consumers this time.

A Managed World

There are a few brilliant features of Windows Phone 7. One of them is the requirement for a hardware “Navigate Back” button. As a Windows Mobile developer working within the constraints of small screens, I never had enough room, and sacrificing space on nearly every screen for a back button was painful. Not only did you have to give up space, you also had to make it fit your application’s style. Windows Forms with the Compact Framework (Rest In Peace) was not fun to work with.

We’re entering a new era. The Windows Phone will only allow third party applications to be written in managed code. I couldn’t imagine any better news! Why? We don’t have to concern ourselves with PInvoke or trying to interoperate with COM objects. We also don’t have to worry about memory leaks or buffer overruns: the garbage collection and strong-type system in the CLR takes care of these. Not only do I not have to worry about these problems as a .NET developer, I also don’t have to worry about my app being negatively impacted by an unmanaged app on the same device, and I don’t have to worry about these problems emerging on my own personal phone despite what apps are installed.

I’ve been plagued by my iPhone lately because of unstable apps, especially since I upgraded to iOS 4.x. I can’t tell you how many times that an app dies because it’s just so damn frequent, and my frustration level is rising. The quality of Apple’s own apps are just as bad: not only does iTunes sync not work correctly on a PC, but I can’t listen to my podcasts through the iPod app without finding that I can’t play a podcast I downloaded (but I can stream it), or that playback works for a while before it starts to stutter the audio and locks up my whole phone. These are signs of a fragile platform and an immature, unmanaged execution environment.

I used to be a technology apologist, but I find myself increasingly critical when it relates to my smart phone. I demand reliability. Forcing applications to use managed code is an excellent way to do that. Once your code is wrapped in such a layer, the wrapper itself can evolve to provide continuously improving reliability and performance.

If you have significant investments in unmanaged code for Windows Mobile devices, it’s too late for sympathy. The .NET platform is over a decade old, and managed execution environments have clearly been the future path for a long time now. You’ve had your chance to convert your code many times over. If you haven’t done so yet and you still think your old algorithms and workflows are valuable, it’s time to get on board and start porting. Pressuring Microsoft to open up Windows Phone to unmanaged code is a recipe for continued instability and ultimately disaster for the platform.

In fact, I would consider the Windows Phone 7 OS to be one step closer to an OS like Singularity, which is a research operating system written in managed code. As some pundits have predicted or recommended that Apple extrapolate iOS to the desktop and eventually drop OSX, we might see a merge of desktop and mobile OS technologies at Microsoft, or at least a borrowing of ideas, to move us closer to a Singularity-like OS whose purpose is improving the reliability of personal computing.

A Bazillion Useless Apps

There are a ton of crappy apps in the Apple App Store and the Android Marketplace. I’d say it’s a pretty heavy majority. Perhaps they shouldn’t be boasting how many apps they have, as if quality and quantity were somehow related. I have a feeling that Microsoft’s much stronger developer platform will produce a higher signal-to-noise ratio in their own marketplace, and I’ll explain why.

Web developers spend their time attempting cross-browser and cross-version support, Apple developers spend their time tracking down bugs that prevents even basic functionality from working well, and Android developers spend their time trying to support a fragmented collection of phone form factors, screen sizes, and graphics processors. I suspect Microsoft developers will find a sweet spot in being able to spend the majority of their time building the actual features that have business or entertainment value.

Why? There are only two supported screen resolutions for Windows Phone 7, and the nice list of hardware requirements give developers a strong common foundation they can count on (while still leaving room for innovation above and beyond that), providing the same kind of consistent platform that Apple developers enjoy. In addition, the operating system will be updated over the air, so all connected devices will run the same version. Without OS modifications like shell UI replacements that can delay that version’s readiness, there’s greater consistency and therefore less friction.

These factors don’t account for problems that can still result from sloppy programming and low standards, but fewer obstacles will remain to building high quality applications. A rich ecosystem of Silverlight and XNA control libraries, frameworks, and tooling already exists. With incredible debugging tools that further help to improve quality, my bet is that we’ll see much more focus on valuable feature development.

PDC 2010

I’m one of the lucky 1000 developers going to the Professional Developer Conference this year at Microsoft’s Campus in Redmond, WA from October 27-29. I’m looking forward to learning more about the platform and hope to get my hands on one. I’m also working on two Windows Azure projects, a big one for a consulting project and a personal one which will be accessed through a Windows Phone app, so I’m excited to catch some of the Azure sessions and meet their team as well.

If you’re also headed to PDC and are interested in meeting up while in Redmond/Seattle, leave me a comment! I’m always interested to hear and share development and technology ideas.