This is not the final name, but it will be a useful product. Given how much I’ve been working with rapidly evolving mobile database schemas lately, I expect it to save me 30 minutes to an hour a day in my frequent build-deploy-test cycles. The lack of a good tool for querying databases on mobile devices causes me a lot of grief. I know Visual Studio 2008 has something built in to connect to mobile devices over ActiveSync, but let’s face it: ActiveSync has been a real pain in the arse, and it fails more often than it works (my next blog post will detail some of those errors). I can only connect to one device at a time, and I lose that connection frequently (meanwhile, SOTI Pocket Controller keeps working and communicating reliably). Plus, a window constantly nags me to create an ActiveSync association.

I work on enterprise systems that sometimes use hundreds of Windows Mobile devices on a network. I don’t want to create an association on each one of those, yet as far as I know, getting ActiveSync to work over wireless requires one.

Pocket Controller or other screen-sharing tools can be used to view the mobile device and run Query Analyzer in QVGA from the desktop, but my queries get big and ugly, and even the normal-looking ones don’t fit well on such a small screen. Plus, Query Analyzer on PDAs is very sparse, with few of the features most of us have grown accustomed to in our tools. Is Pocket Query Analyzer where you want to be doing hardcore query building or troubleshooting?

So what would a convenient, time-saving, full-featured mobile database query tool look like? How could it save us time? First, all of the basics would have to be there: loading and saving query files, syntax color coding, executing queries, and displaying the results in a familiar “Query Analyzer”/“Management Studio” UI. I want to highlight a few lines of SQL and press F5 to run them, and I expect others have that instinct as well. I also want to view connected devices, use several tabs for queries, and know exactly which device and database the active query window corresponds to, with no hunting and searching for this information. It should also make it easy to write new providers for different database engines (or different versions of them).
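A minimal sketch of the pluggable-provider idea and the F5 behavior. `IDatabaseProvider`, `ProviderRegistry`, and `QueryWindow` are hypothetical names, not the product’s API: each provider handles one engine (or engine version), and each query window records the device, connection, and engine it targets.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical provider contract: one implementation per database engine or version.
public interface IDatabaseProvider
{
    string EngineName { get; }
    IEnumerable<object[]> Execute(string connectionString, string sql);
}

// Hypothetical registry mapping engine names (case-insensitive) to providers.
public class ProviderRegistry
{
    private readonly Dictionary<string, IDatabaseProvider> providers =
        new Dictionary<string, IDatabaseProvider>(StringComparer.OrdinalIgnoreCase);

    public void Register(IDatabaseProvider provider)
    {
        providers[provider.EngineName] = provider;
    }

    public IDatabaseProvider Get(string engineName)
    {
        IDatabaseProvider provider;
        if (!providers.TryGetValue(engineName, out provider))
            throw new KeyNotFoundException("No provider registered for " + engineName);
        return provider;
    }
}

// Each query tab knows which device, database, and engine it is bound to.
public class QueryWindow
{
    public string DeviceName { get; set; }
    public string ConnectionString { get; set; }
    public string EngineName { get; set; }
    public string Text { get; set; }
    public string SelectedText { get; set; }

    // F5 semantics: run only the highlighted SQL if there is a selection, else the whole buffer.
    public string SqlToRun()
    {
        return string.IsNullOrWhiteSpace(SelectedText) ? Text : SelectedText;
    }

    public IEnumerable<object[]> Run(ProviderRegistry registry)
    {
        return registry.Get(EngineName).Execute(ConnectionString, SqlToRun());
    }
}
```

In this sketch a SQL Server provider and a mobile-engine provider implement the same interface, so the tool never needs engine-specific code outside the providers themselves.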

Second, integration. It should integrate into my development environments, Visual Studio 2005 & 2008. It should also give an integrated list of databases, working with normal SQL Server databases as well as mobile servers. If we have a nice extensible tool for querying our data, why limit it to Windows Mobile databases?

Sometimes it’s the little details (the micro-behaviors and features, the nuances of the API and data model) that define the style and usefulness of a product. I’ve been paying a lot of attention to these little gestures, features, and semantics, and I’m aiming for a very smooth experience. I’m curious to see what happens when we remove all the unnecessary friction from our development workflows, so our brains are free to define solutions as fast as we can envision them.

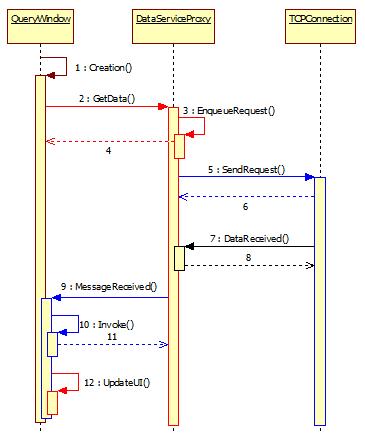

Third, an appreciation for and focus on performance. Instead of waiting for the entire result to return before marshalling the data back to the client, why not stream it across as it’s read, several rows at a time? Users would get nearly instantaneous feedback on their queries, even if a query takes a while to come fully across the wire. Binary serialization should be used for best performance and is on the roadmap, but it’s coming after v1.0, once I decide whether to build or buy that piece.
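One way to sketch the streaming idea, assuming rows come from any ADO.NET `IDataReader`: an iterator reads rows on demand, and a batching helper yields each group of rows as soon as it fills, so a sender could push it over the wire before the query finishes. `ResultStreaming`, `ReadRows`, and `InBatches` are hypothetical names, and the transport itself is left out.

```csharp
using System;
using System.Collections.Generic;
using System.Data;

public static class ResultStreaming
{
    // Reads one row at a time from any ADO.NET reader; rows are produced on demand.
    public static IEnumerable<object[]> ReadRows(IDataReader reader)
    {
        while (reader.Read())
        {
            var values = new object[reader.FieldCount];
            reader.GetValues(values);
            yield return values;
        }
    }

    // Groups rows into batches and yields each batch as soon as it fills, so a caller
    // can send it to the client while the rest of the result is still being read.
    // As with any iterator, the argument check runs on the first MoveNext().
    public static IEnumerable<List<T>> InBatches<T>(IEnumerable<T> rows, int batchSize)
    {
        if (batchSize <= 0) throw new ArgumentOutOfRangeException("batchSize");
        var batch = new List<T>(batchSize);
        foreach (T row in rows)
        {
            batch.Add(row);
            if (batch.Count == batchSize)
            {
                yield return batch;
                batch = new List<T>(batchSize);   // fresh list so the consumer can keep the old one
            }
        }
        if (batch.Count > 0)
            yield return batch;   // trailing partial batch
    }
}
```

Because both methods are iterators, nothing is read until the consumer asks for the next batch, which keeps memory use on the device roughly constant regardless of result size.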

Finally, a highly-extensible architecture that creates the opportunity for additional functionality (and therefore product longevity). The most exciting part of this project is probably not the query tool itself, but the Device Explorer window, the auto-discovering composite assets it visualizes, and the ability to remotely fetch asset objects and execute commands on them.

The Device identifies (broadcasts) itself and can be interrogated for its assets, which are composed hierarchically into what is visualized as an asset tree. One Device might have some Database assets and some Folder and File assets. The Database assets contain a collection of Table assets, which contain DatabaseRow and DatabaseColumn assets, and so on. In this way, the whole inventory of objects on the device can be interrogated, discovered, and manipulated in a standard way that makes inherent sense to the human brain: RegistryEntry, VideoCamera, whatever you want a handle to.
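The asset tree described here is essentially the Composite pattern. A minimal sketch, assuming a hypothetical `Asset` base class; the concrete class names follow the ones in the text, except `FolderAsset` and `FileAsset`, which are suffixed to avoid clashing with `System.IO.File`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical base class: every asset has a name and an ordered list of child assets.
public abstract class Asset
{
    private readonly List<Asset> children = new List<Asset>();

    protected Asset(string name) { Name = name; }

    public string Name { get; private set; }
    public IList<Asset> Children { get { return children; } }

    // Adds a child and returns it with its concrete type, so trees can be built fluently.
    public T Add<T>(T child) where T : Asset
    {
        children.Add(child);
        return child;
    }

    // Depth-first, pre-order walk: the order a tree view would render the assets in.
    public IEnumerable<Asset> Descendants()
    {
        foreach (Asset child in children)
        {
            yield return child;
            foreach (Asset grandchild in child.Descendants())
                yield return grandchild;
        }
    }
}

public class Device : Asset { public Device(string name) : base(name) { } }
public class Database : Asset { public Database(string name) : base(name) { } }
public class Table : Asset { public Table(string name) : base(name) { } }
public class DatabaseColumn : Asset { public DatabaseColumn(string name) : base(name) { } }
public class DatabaseRow : Asset { public DatabaseRow(string name) : base(name) { } }
public class FolderAsset : Asset { public FolderAsset(string name) : base(name) { } }
public class FileAsset : Asset { public FileAsset(string name) : base(name) { } }
```

Adding a new kind of asset (a `RegistryEntry`, say) is just another subclass; the tree view and traversal code stay unchanged.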

This involves writing “wrapper” classes (facades or proxies) for each kind of asset, along with the code to manipulate it locally. Because the asset classes are proxies or pointers to the actual thing, and because they inherit from a base class that handles serialization, persistence, data binding, etc., they automatically support being remoted across the network, from any node to any other node. Asset objects are retrieved in a lazy-load fashion: when a client interrogates a device, it actually interrogates the Device object. From there it can request child assets, which the Device object may fetch from the remote device at that time or serve from its locally cached copies. If a client already knows about a remote asset, it can connect to and manipulate it directly (as long as the remote device is online).
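A sketch of the lazy-load-and-cache behavior, assuming a hypothetical `RemoteAsset` proxy. The `fetchChildren` delegate stands in for whatever network call actually retrieves child assets from the device, and the `Path` string is an assumed addressing scheme that would let a client reconnect to a known asset directly.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical proxy for an asset that lives on a remote device. Children are fetched
// on first access and then served from a local cache until Refresh() is called.
public class RemoteAsset
{
    private readonly Func<RemoteAsset, IEnumerable<RemoteAsset>> fetchChildren;
    private List<RemoteAsset> cachedChildren;   // null until the first fetch

    public RemoteAsset(string path, Func<RemoteAsset, IEnumerable<RemoteAsset>> fetchChildren)
    {
        Path = path;
        this.fetchChildren = fetchChildren;
    }

    // Assumed address of the asset on its device, e.g. "PDA-042/Inventory.sdf".
    public string Path { get; private set; }

    public IList<RemoteAsset> Children
    {
        get
        {
            if (cachedChildren == null)
                cachedChildren = new List<RemoteAsset>(fetchChildren(this));   // the "remote" round trip
            return cachedChildren;
        }
    }

    // Drops the local copy so the next access goes back to the device.
    public void Refresh() { cachedChildren = null; }
}
```

Constructing a proxy costs nothing; the device is only contacted when someone actually expands a node.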

With a remoting framework that makes shuffling objects around natural, much less message parsing and interpretation code needs to be written. Normal validation and replication collision logic can be written in the same classes that define the persistent schema.
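To illustrate keeping validation and collision handling next to the schema, here is a hypothetical `CustomerRecord` asset. The last-writer-wins rule based on a modification timestamp is an assumed policy chosen for brevity, not something the framework prescribes; a real system might merge field by field instead.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical persistent asset: the same class defines the schema (properties),
// the validation rules, and how a replication collision is resolved.
public class CustomerRecord
{
    public Guid Id { get; set; }
    public string Name { get; set; }
    public DateTime LastModifiedUtc { get; set; }

    // Returns a list of problems; an empty list means the record is valid.
    public IList<string> Validate()
    {
        var errors = new List<string>();
        if (Id == Guid.Empty) errors.Add("Id is required.");
        if (string.IsNullOrEmpty(Name)) errors.Add("Name is required.");
        return errors;
    }

    // Assumed policy: last writer wins by modification time.
    // Ties keep the local copy so the outcome is deterministic.
    public CustomerRecord ResolveCollision(CustomerRecord remote)
    {
        if (remote == null || remote.Id != Id)
            throw new ArgumentException("Can only resolve collisions for the same record.");
        return remote.LastModifiedUtc > LastModifiedUtc ? remote : this;
    }
}
```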

So what about services? Where are the protocols defined? Assets and Services have an orthogonal relationship, so I think Services should still exist as Service classes, but each service could provide a set of extension methods that extend the Asset classes. That way, if you add a reference to ServiceX, you will be able to access a member Asset.ServiceXMember (like Device.Databases, which would call a method in MobileQueryService). If this works out the way I expect, it will be my first real use of extension methods. (I’ve had ideas for extending string and other simple types for parsing and the like, of course, but never for extending classes whose code I already own.) In the linguistic terms I’m using to visualize this: Services = Verbs, Assets = Nouns. Extension methods are the sticky tape between Nouns and Verbs.

```csharp
// Declared in a static class in the service's assembly; body omitted here
public static AssetCollection<Database> Databases(this Device device) { /* ... */ }
```
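A self-contained version of the Noun/Verb idea. `MobileQueryService` comes from the text above; `AssetCollection<T>`, `GetDatabaseNames`, and the hard-coded database names are hypothetical stand-ins. Note that C# 3.0 extension methods use method-call syntax, so the member reads as `device.Databases()` rather than as a property.

```csharp
using System;
using System.Collections.Generic;

// --- Asset assembly ("nouns"): knows nothing about any service. ---
public class AssetCollection<T> : List<T> { }   // hypothetical collection type

public class Device
{
    public Device(string name) { Name = name; }
    public string Name { get; private set; }
}

public class Database
{
    public Database(string name) { Name = name; }
    public string Name { get; private set; }
}

// --- Service assembly ("verbs"): once this is in scope, Device appears to gain Databases(). ---
public class MobileQueryService
{
    // Hypothetical: a real service would ask the device over the network.
    public IEnumerable<string> GetDatabaseNames(Device device)
    {
        return new[] { "Inventory.sdf", "Settings.sdf" };
    }
}

public static class MobileQueryServiceExtensions
{
    private static readonly MobileQueryService Service = new MobileQueryService();

    // The "sticky tape": an extension method on Device that delegates to the service.
    public static AssetCollection<Database> Databases(this Device device)
    {
        if (device == null) throw new ArgumentNullException("device");
        var result = new AssetCollection<Database>();
        foreach (string name in Service.GetDatabaseNames(device))
            result.Add(new Database(name));
        return result;
    }
}
```

The Device class never references the service; removing the service reference simply removes `Databases()` from IntelliSense.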

With the ability to effortlessly and remotely drill into the assets on a mobile device (or any computer, for that matter) and manipulate them through a simple object model, I expect it to be a significantly productive platform on which to build. Commands executed against those assets could be scripted for automatic software updates, queued for guaranteed delivery, or supplemented with new commands in plug-in modules that aid in debugging, diagnostics, runtime statistics gathering, monitoring, synchronizing the device time with a server, capturing video or images, delivering software updates, etc.
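A minimal sketch of queuing commands for delivery, assuming a hypothetical `AssetCommand` type and a `trySend` delegate that returns `true` only when the device acknowledges the command. This in-memory version gives at-least-once, in-order delivery while the process is running; real guaranteed delivery would also need to persist the queue (for example, to a local database) so it survives restarts.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical command addressed to an asset on a device.
public class AssetCommand
{
    public string TargetPath { get; set; }   // e.g. "PDA-042/Clock"
    public string Name { get; set; }         // e.g. "SyncTime"
}

// A command stays queued until the sender reports success, so commands
// for an offline device are simply retried on the next Flush().
public class CommandQueue
{
    private readonly Queue<AssetCommand> pending = new Queue<AssetCommand>();
    private readonly Func<AssetCommand, bool> trySend;   // true once delivered and acknowledged

    public CommandQueue(Func<AssetCommand, bool> trySend) { this.trySend = trySend; }

    public int PendingCount { get { return pending.Count; } }

    public void Enqueue(AssetCommand command) { pending.Enqueue(command); }

    // Sends in FIFO order and stops at the first failure, preserving ordering.
    // Returns the number of commands delivered in this pass.
    public int Flush()
    {
        int delivered = 0;
        while (pending.Count > 0 && trySend(pending.Peek()))
        {
            pending.Dequeue();
            delivered++;
        }
        return delivered;
    }
}
```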

And if the collection of assets can grow, so can UI components such as context menu items, document windows, and so on, extending and adding to the usefulness of the Device Explorer window. By defining UI components as UserControls and defining my own Command invocation mechanism, they can be hosted in Visual Studio or used outside of that with just a few adjustments.
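A sketch of a host-neutral command mechanism, assuming hypothetical `UiCommand` and `CommandRegistry` types. Plug-in modules register commands against asset types, and whichever host is running (a Visual Studio tool window or a standalone application) asks the registry for the commands that apply to the selected asset when it builds a context menu.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical command with no Visual Studio types in its signature,
// so the same object can back a VS context menu or a standalone menu.
public class UiCommand
{
    public UiCommand(string text, Action<object> execute)
    {
        Text = text;
        Execute = execute;
    }

    public string Text { get; private set; }
    public Action<object> Execute { get; private set; }   // receives the selected asset
}

// Plug-ins register commands per asset type; hosts query by the selected asset.
public class CommandRegistry
{
    private readonly Dictionary<Type, List<UiCommand>> byAssetType = new Dictionary<Type, List<UiCommand>>();

    public void Register(Type assetType, UiCommand command)
    {
        List<UiCommand> list;
        if (!byAssetType.TryGetValue(assetType, out list))
        {
            list = new List<UiCommand>();
            byAssetType[assetType] = list;
        }
        list.Add(command);
    }

    // Walks up the class hierarchy, so a command registered on a base asset class
    // applies to every derived asset. Most specific commands come first.
    public IList<UiCommand> CommandsFor(object asset)
    {
        var result = new List<UiCommand>();
        for (Type t = asset.GetType(); t != null; t = t.BaseType)
        {
            List<UiCommand> list;
            if (byAssetType.TryGetValue(t, out list)) result.AddRange(list);
        }
        return result;
    }
}
```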

More details to come.